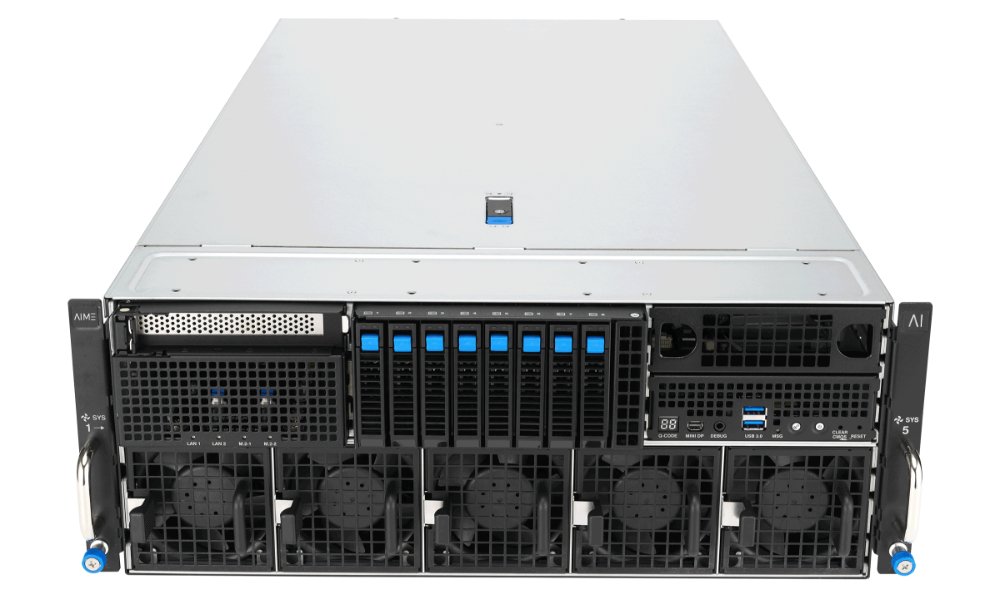

AIME A8005

Multi GPU HPC Rack Server

The AIME A8005 is based on the new ASUS ESC8000A-E13P barebone and is powered by two AMD EPYC™ Turin processors, each with up to 192 CPU cores, for a total of up to 768 parallel CPU threads. It can be configured with up to eight of the most advanced deep learning accelerators and GPUs, such as the NVIDIA H200 NVL and the NVIDIA RTX Pro 6000 Blackwell.

The A8005 is the ultimate multi-GPU server: dual EPYC Turin CPUs with up to 3 TB of DDR5 main memory, up to 240 TB of NVMe SSD storage at the fastest PCIe 5.0 bus speeds, and up to 400 GBE network connectivity.

Built to run 24/7 for reliable high-performance computing, whether in your in-house data center, at a co-location facility, or as a hosted solution.

AIME A8005 - The Deep Learning & Inference Server

The GPU-optimized design of the AIME A8005 with high airflow cooling allows the use of eight high-end double-slot GPUs like the latest NVIDIA H200 NVL, NVIDIA H100 NVL, RTX Pro 6000 Blackwell, NVIDIA L40S, RTX 6000 Ada, and RTX 5000 Ada GPU models.

Definable GPU Configuration

Choose the desired configuration among the most powerful NVIDIA GPUs for Deep Learning and large language model inference:

Up to 8x NVIDIA H200 141GB

The NVIDIA Hopper H200 generation is the NVIDIA processor with the highest compute density. The NVIDIA H200 141GB is the direct successor of the H100 80GB and is based on the GH100 processor in 5nm manufacturing with 16,896 CUDA cores, fourth-generation Tensor Cores, and 141 GB of HBM3e memory with data transfer rates of 4.8 TB/s.

Additionally, up to four NVIDIA H200 cards can be interconnected via an NVLink bridge with data transfer rates of 900 GB/s.

The NVIDIA H200 NVL is currently the most powerful deep learning accelerator card available.

Up to 8x NVIDIA H100 NVL 94GB

The NVIDIA H100 NVL is an update to the NVIDIA Hopper H100 80GB generation. It is based on the GH100 processor in 5nm manufacturing with 16,896 CUDA cores, fourth-generation Tensor Cores, and 94 GB of HBM3 memory with data transfer rates of 3.9 TB/s. A single NVIDIA H100 NVL accelerator delivers 1.67 petaFLOPS of fp16 performance. Eight accelerators of this type add up to more than 8,000 teraFLOPS of fp32 performance. The NVIDIA H100 generation is the first to support PCIe 5.0, which doubles the total PCIe data transfer rate to up to 128 GB/s. The NVIDIA H100 NVL is currently the most efficient (perf/watt) deep learning accelerator card available.

Up to 8x NVIDIA RTX Pro 6000 Blackwell Server 96GB

The first GPU of NVIDIA's next-generation Blackwell architecture is the RTX Pro 6000 Server Edition, with an unbeaten 24,064 CUDA cores and 752 fifth-generation Tensor Cores.

With its GPU memory increased to 96 GB of GDDR7 and an impressive 1.6 TB/s of memory bandwidth, it sets a new standard in GPU computing.

Up to 8x NVIDIA L40S 48GB

The NVIDIA L40S is built on the latest NVIDIA GPU architecture: Ada Lovelace. It is the direct successor of the RTX A40 and the passively cooled version of the RTX 6000 Ada. The L40S combines 568 fourth-generation Tensor Cores and 18,176 next-gen CUDA® cores with 48GB of GDDR6 graphics memory for strong rendering, AI, graphics, and compute performance.

Up to 8x NVIDIA RTX 6000 Ada 48GB

The RTX™ 6000 Ada is built on the latest NVIDIA GPU architecture: Ada Lovelace. It is the direct successor of the RTX A6000 and the Quadro RTX 6000. The RTX 6000 Ada combines 568 fourth-generation Tensor Cores and 18,176 next-gen CUDA® cores with 48GB of graphics memory for strong rendering, AI, graphics, and compute performance.

Up to 8x NVIDIA RTX 5000 Ada 32GB

The RTX™ 5000 Ada is built on the latest NVIDIA GPU architecture: Ada Lovelace. It is the direct successor of the RTX A5000/A5500 and the Quadro RTX 6000. The RTX 5000 Ada combines 400 fourth-generation Tensor Cores and 12,800 next-gen CUDA® cores with 32GB of graphics memory for convincing rendering, AI, graphics, and compute performance.

All NVIDIA GPUs are supported by NVIDIA’s CUDA-X AI SDK, including cuDNN and TensorRT, which power nearly all popular deep learning frameworks.
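To gauge which of the GPU options above suits a given large language model, the following minimal sketch compares the approximate size of the model weights with the combined GPU memory. The per-card memory sizes come from the list above; the 70-billion-parameter model, the 2 bytes per parameter (16-bit weights), and the 20% overhead for KV cache and activations are illustrative assumptions, not measured values.

```python
# GPU memory per card in GB, taken from the configuration list above.
GPU_MEMORY_GB = {
    "H200 NVL": 141,
    "H100 NVL": 94,
    "RTX Pro 6000 Blackwell": 96,
    "L40S": 48,
    "RTX 6000 Ada": 48,
    "RTX 5000 Ada": 32,
}


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def min_gpus(gpu: str, params_billion: float, bytes_per_param: float = 2.0,
             overhead: float = 0.2, max_gpus: int = 8):
    """Smallest GPU count whose combined memory holds weights plus overhead.

    `overhead` (assumed 20%) is a rough stand-in for KV cache and activations.
    Returns None if even `max_gpus` cards are not enough.
    """
    needed = weights_gb(params_billion, bytes_per_param) * (1 + overhead)
    for n in range(1, max_gpus + 1):
        if n * GPU_MEMORY_GB[gpu] >= needed:
            return n
    return None


if __name__ == "__main__":
    # Hypothetical 70B-parameter model with 16-bit weights.
    for name in GPU_MEMORY_GB:
        print(f"{name}: {min_gpus(name, params_billion=70)} GPU(s)")
```

This is only a capacity estimate. Real memory needs depend on context length, batch size, and the inference framework used.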

Dual EPYC Turin CPU Power

The latest dual AMD EPYC Turin server CPUs, with support for DDR5 5600 MHz and PCIe 5.0, deliver up to 2x 192 CPU cores with a total of 768 CPU threads. Insane CPU power with an unbeaten price-performance ratio.

The available 2x 128 PCIe 5.0 lanes of the AMD EPYC CPUs allow the highest interconnect and data transfer rates between the CPUs and the GPUs and ensure that all GPUs are connected with full x16 PCIe 5.0 bandwidth.
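As a rough illustration of the lane budget and link speed, the sketch below computes how many lanes eight x16 GPUs occupy and what an x16 PCIe 5.0 link can carry. It relies on the PCIe 5.0 signalling rate of 32 GT/s per lane and 128b/130b encoding; the function names are hypothetical, and protocol overhead beyond line encoding is ignored.

```python
PCIE5_GT_PER_LANE = 32.0         # PCIe 5.0 raw signalling rate per lane in GT/s
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie5_gb_per_s(lanes: int) -> float:
    """Usable bandwidth of a PCIe 5.0 link in GB/s, one direction."""
    return PCIE5_GT_PER_LANE * ENCODING_EFFICIENCY * lanes / 8


def gpu_lanes(num_gpus: int = 8, lanes_per_gpu: int = 16) -> int:
    """Total PCIe lanes occupied when every GPU gets a full x16 link."""
    return num_gpus * lanes_per_gpu


if __name__ == "__main__":
    one_way = pcie5_gb_per_s(16)
    print(f"Lanes used by 8 GPUs: {gpu_lanes()}")
    print(f"x16 link, one direction: {one_way:.1f} GB/s")
    print(f"x16 link, both directions: {2 * one_way:.1f} GB/s")
```

Eight GPUs at x16 occupy 128 lanes, which leaves lanes free for NVMe storage and network adapters. The commonly quoted 128 GB/s figure for an x16 link is the raw bidirectional rate before encoding overhead.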

A large number of available CPU cores can improve performance dramatically when the CPU is used for preprocessing and delivering data to optimally feed the GPUs with workloads.

Up to 8x 30 TB Direct PCIe 5.0 NVMe SSD Storage

Deep Learning is most often linked to a high amount of data to be processed and stored. High throughput and fast access times to the data are essential for fast turnaround times.

The AIME A8005 can be configured with up to eight exchangeable U.3 PCIe 5.0 NVMe triple-level cell (TLC) SSDs with a capacity of up to 30.72 TB each, adding up to a total capacity of more than 240 TB of the fastest NVMe SSD storage.

Since each SSD is connected directly to the CPUs and main memory via PCIe 5.0 lanes, the drives achieve consistently high transfer rates of up to 10,000 MB/s read and 4,900 MB/s write.

As usual in the server sector, the SSDs have an MTBF of 2,500,000 hours with 1 DWPD (Drive Writes Per Day) and a 5-year manufacturer's warranty.
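To put the endurance and reliability figures into perspective, the following sketch converts them into total data written over the warranty period and an approximate annual failure rate. It uses the 30.72 TB drive size from the technical details and assumes a constant failure rate (exponential model), which is a common simplification rather than a manufacturer statement.

```python
import math

HOURS_PER_YEAR = 8760


def total_written_pb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Data that may be written over the warranty period, in PB."""
    return capacity_tb * dwpd * 365 * years / 1000


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Probability of failure within one year, assuming a constant failure rate."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


if __name__ == "__main__":
    written = total_written_pb(capacity_tb=30.72, dwpd=1, years=5)
    afr = annualized_failure_rate(2_500_000)
    print(f"Rated writes per drive over 5 years: {written:.1f} PB")
    print(f"Approximate annual failure rate: {afr:.2%}")
```

Under these assumptions, a single 30.72 TB drive is rated for roughly 56 PB of writes over five years, and the MTBF corresponds to an annual failure rate of about 0.35% per drive.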

Optional Hardware RAID: Up to 60 TB NVMe SSD Storage

In case hardware RAID storage is required, the A8005 offers the option to use six of its drive bays in a reliable hardware RAID configuration: up to 60 TB of the fastest NVMe RAID SSD storage with RAID levels 0 / 1 / 5 / 10 and 50.
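How much usable capacity remains depends on the chosen RAID level. The sketch below applies the textbook capacity formulas to six drives; the 15.36 TB drive size is the largest RAID option in the technical details, and the split into two spans for RAID 50 is an assumption. It does not model any specific controller's behavior.

```python
def raid_usable_tb(level: str, drives: int, drive_tb: float, spans: int = 2) -> float:
    """Usable capacity in TB for common RAID levels (textbook formulas)."""
    if level == "0":      # striping only, no redundancy
        minimum, data_drives = 2, drives
    elif level == "1":    # classic two-drive mirror
        if drives != 2:
            raise ValueError("RAID 1 is modelled here as a two-drive mirror")
        minimum, data_drives = 2, 1
    elif level == "5":    # one drive's worth of parity
        minimum, data_drives = 3, drives - 1
    elif level == "10":   # striped mirrors, half the drives hold copies
        if drives % 2:
            raise ValueError("RAID 10 needs an even number of drives")
        minimum, data_drives = 4, drives // 2
    elif level == "50":   # striped RAID 5 spans, one parity drive per span
        if drives % spans:
            raise ValueError("drives must divide evenly into spans")
        minimum, data_drives = 3 * spans, drives - spans
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} drives")
    return data_drives * drive_tb


if __name__ == "__main__":
    for level in ("0", "5", "10", "50"):
        print(f"RAID {level}: {raid_usable_tb(level, 6, 15.36):.2f} TB usable")
```

With six 15.36 TB drives, RAID 50 in two spans leaves four drives' worth of capacity, which is about 61 TB.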

High Connectivity and Management Interface

The A8005 is fitted with 2x onboard 10 Gbit/s RJ45 LAN ports and can be equipped with network adapters of up to 400 Gbit/s (GBE) for the fastest connection to NAS resources and big data collections. The highest available LAN connectivity is also a must-have for data interchange in a distributed computing cluster.

The AIME A8005 is fully remotely manageable through ASMB12-iKVM IPMI/BMC, powered by the AST2600, which allows the AIME A8005 to be integrated into larger server clusters.

Optimized for Multi GPU Server Applications

The AIME A8005 offers energy efficiency with redundant Titanium-grade power supplies, which enable long-term fail-safe operation.

Its thermal control technology provides more efficient power consumption for large-scale environments.

All set up, configured, and tuned for perfect multi-GPU and deep learning performance by AIME.

The AIME A8005 comes with a preinstalled Ubuntu OS configured with the latest drivers and frameworks like TensorFlow, Keras, and PyTorch. It is ready right after boot to accelerate your deep learning applications.
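A quick way to confirm after boot that all configured GPUs are visible is to query the NVIDIA driver. The following minimal sketch calls the `nvidia-smi` command-line tool that ships with the NVIDIA driver; the helper name `list_gpus` is hypothetical, and the function returns an empty list on machines where the tool is not installed.

```python
import shutil
import subprocess


def list_gpus() -> list:
    """Return one 'name, memory' string per GPU, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    print(f"{len(gpus)} GPU(s) detected")
    for gpu in gpus:
        print(" ", gpu)
```

On a fully populated system the list should contain eight entries, one per installed GPU.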

Technical Details AIME A8005

| Specification | Details |
|---|---|
| Type | Rack Server 4U, 90cm depth |
| CPU (configurable) | EPYC Turin<br>2x EPYC 9135 (16 cores, Turin, 3.65 / 4.30 GHz)<br>2x EPYC 9255 (24 cores, Turin, 3.20 / 4.30 GHz)<br>2x EPYC 9355 (32 cores, Turin, 3.55 / 4.40 GHz)<br>2x EPYC 9455 (48 cores, Turin, 3.15 / 4.40 GHz)<br>2x EPYC 9555 (64 cores, Turin, 3.20 / 4.40 GHz)<br>2x EPYC 9655 (96 cores, Turin, 2.60 / 4.50 GHz)<br>2x EPYC 9755 (128 cores, Turin, 2.70 / 4.10 GHz)<br>2x EPYC 9845 (160 cores, Turin, 2.10 / 3.70 GHz)<br>2x EPYC 9965 (192 cores, Turin, 2.25 / 3.70 GHz) |
| RAM | 256 / 512 / 1024 / 1536 / 2048 / 2304 / 3072 GB DDR5 5600 MHz ECC memory |
| GPU Options | 1 to 8x NVIDIA H200 NVL 141GB or<br>1 to 8x NVIDIA H100 NVL 94GB or<br>1 to 8x NVIDIA RTX PRO 6000 Blackwell 96GB or<br>1 to 8x NVIDIA RTX L40S 48GB or<br>1 to 8x NVIDIA RTX 6000 Ada 48GB or<br>1 to 8x NVIDIA RTX 5000 Ada 32GB or<br>1 to 8x NVIDIA RTX 4500 Ada 24GB |
| Cooling | GPUs are cooled with an air stream provided by 8 high-performance temperature-controlled fans, > 100000h MTBF<br>CPUs and mainboard are cooled with an air stream provided by 5 independent high-performance temperature-controlled fans, > 100000h MTBF |
| Storage | Up to 4 TB built-in M.2 NVMe PCIe 5.0 SSD (optional)<br>Up to 8x 30.72 TB U.3 NVMe PCIe 5.0 SSD<br>Triple Level Cell (TLC) quality<br>10000 MB/s read, 4900 MB/s write<br>MTBF of 2,500,000 hours and 5 years manufacturer's warranty with 1 DWPD<br>Optional Broadcom Hardware RAID:<br>Up to 6x SSD 7.68 TB SATA RAID 0/1/5/10 or<br>Up to 6x SSD 3.84 TB NVMe RAID 0/1/5/10 or<br>Up to 6x SSD 7.68 TB NVMe RAID 0/1/5/10 or<br>Up to 6x SSD 15.36 TB NVMe RAID 0/1/5/10 |
| Network | Onboard:<br>1x IPMI Management LAN<br>2x 10 GBE LAN RJ45<br>Optional:<br>2x 10 GBE LAN SFP+ or<br>2x 25 GBE LAN SFP28 or<br>1x 100 GBE QSFP28 (ConnectX-5) or<br>2x 100 GBE QSFP28 (ConnectX-5) or<br>1x 200 GBE QSFP56 (ConnectX-6) or<br>1x 400 GBE OSFP (ConnectX-7) |
| USB | 2x USB 3.2 Gen 1 ports (front)<br>1x USB 3.2 Gen 1 port (back) |
| Management solutions | Onboard ASMB12-iKVM<br>Mini DisplayPort |
| PSU | 3+1 3200W redundant power<br>80 PLUS Titanium certified (96% efficiency) |
| Noise-Level | 92dBA |
| Dimensions (WxHxD) | 440mm x 175mm (4U) x 800mm<br>17.3" x 6.9" x 31.5" |
| Operating Environment | Operation temperature: 10℃ ~ 35℃<br>Non operation temperature: -40℃ ~ 60℃ |
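For planning purposes, the 3+1 redundant 3200W power supplies listed above can be turned into a simple power budget. The sketch below assumes that three supplies carry the load while the fourth is the redundant spare. The per-GPU, per-CPU, and remaining-system wattages are hypothetical placeholder values for illustration and should be replaced with the figures for the actual configuration.

```python
def power_headroom_w(gpu_w: float, num_gpus: int = 8,
                     cpu_w: float = 500, num_cpus: int = 2,
                     other_w: float = 800,
                     psu_w: float = 3200, active_psus: int = 3) -> float:
    """Remaining power budget in watts with one PSU held back as the spare.

    cpu_w and other_w (fans, drives, memory, NICs) are assumed placeholder
    values, not figures from the spec sheet.
    """
    budget = psu_w * active_psus
    load = gpu_w * num_gpus + cpu_w * num_cpus + other_w
    return budget - load


if __name__ == "__main__":
    # Hypothetical example: eight accelerators at 600 W each.
    print(f"Headroom: {power_headroom_w(gpu_w=600):.0f} W")
```

A positive result means the assumed load still fits within the three active supplies, so the system stays within budget even if one supply fails.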