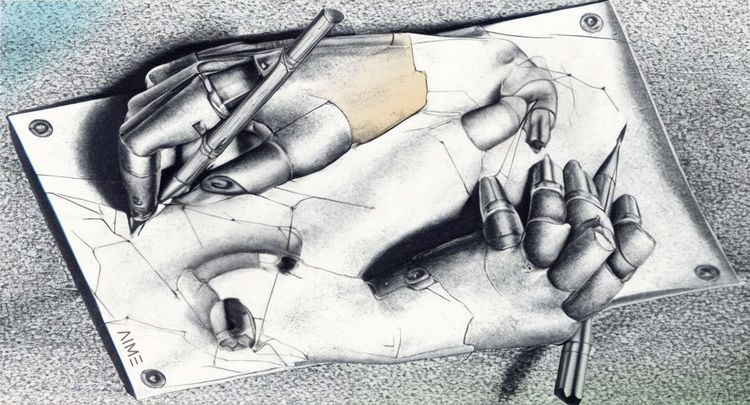

AIME API - The Scalable AI Model Inference Solution

Do you have a deep learning model running in your console or Jupyter notebook that you would like to make available to your company or deploy to the world? AIME API is an easy and scalable way to do so.